Calculate the size of the log store your project requires. Sizing your log store correctly is important, because a store that is too small or too large can lead to performance issues.

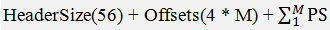

You will start this procedure by calculating your project’s internal record size. An internal record represents a row in an Event Stream Processor window. Each row contains a fixed-size header plus a variable-size payload containing the column offsets, column data, and any optional fields. Use this formula for the calculation in step 1:

In the formula,

- M represents the number of columns

- PS represents the primitive datatype size for each of the M columns

Primitive datatypes are the building blocks that make up more complex structures such as records, dictionaries, vectors, and event caches. This table gives the size of each datatype.
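The step 1 calculation can be sketched in code. This is a minimal sketch that assumes only the record layout described above: a fixed-size header, one offset per column, the column data (the sum of PS over the M columns), and any optional fields. The header size and the per-column offset size are hypothetical parameters, so substitute the constants from the record-size formula. The names `record_size`, `header_bytes`, and `offset_bytes_per_column` are illustrative and are not part of any Event Stream Processor API.

```python
from typing import Mapping, Sequence


def record_size(
    column_types: Sequence[str],
    type_sizes: Mapping[str, int],
    header_bytes: int,
    offset_bytes_per_column: int,
    optional_bytes: int = 0,
) -> int:
    """Estimate the size in bytes of one internal record (one window row).

    column_types: datatype of each of the M columns.
    type_sizes: primitive datatype size (PS) for each datatype, from the size table.
    header_bytes: fixed-size header (hypothetical placeholder; use the formula's constant).
    offset_bytes_per_column: size of one column offset (hypothetical placeholder).
    optional_bytes: total size of any optional fields in the payload.
    """
    m = len(column_types)  # M: number of columns
    column_data = sum(type_sizes[t] for t in column_types)  # PS summed over all M columns
    return header_bytes + offset_bytes_per_column * m + column_data + optional_bytes


# Illustrative sizes and constants only; take real values from the table and formula.
sizes = {"integer": 4, "long": 8, "string": 1 + 20}
print(record_size(["integer", "long", "string"], sizes, header_bytes=32, offset_bytes_per_column=4))  # 77
```

Keeping the constants as arguments means the sketch does not hard-code any value that should come from the formula or the datatype size table.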

Note: Guaranteed delivery (GD) logs hold events stored for delivery. If no GD logs are stored in the log store, you can skip step 1, step 2, and step 3. Instead, compute the dataSize by using the Playback feature in Studio or the esp_playback utility to record and play back real data, which gives you a better idea of the amount of data you need to store. (See the Studio Users Guide for details on Playback or the Utilities Guide for details on esp_playback.) The log store reports "liveSize" in the server log when the project exits (with log level three or higher) or after every compaction (with log level six or higher). Use the "liveSize" value for the dataSize referenced in step 2 and beyond.