Event Stream Processor clusters are designed for simplicity and, once started, require minimal interaction from administrators.

A cluster consists of one or more nodes. Single-node clusters provide a convenient starting point from which to build and refine multinode clusters.

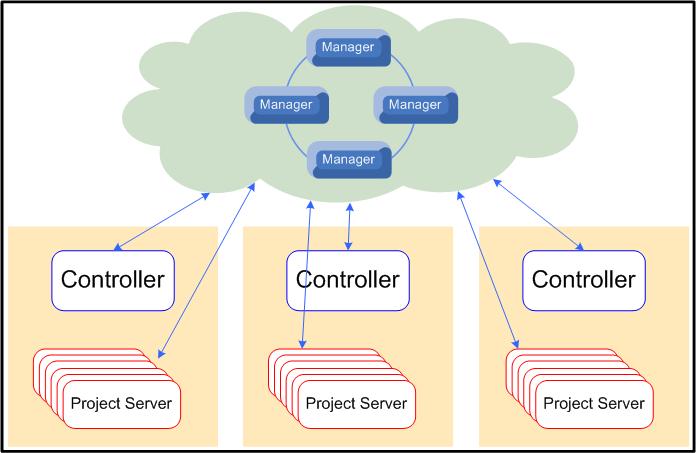

Nodes are processes that run on hosts. There are two functional types of nodes: managers and controllers. A manager is an external access point to a cluster. Managers maintain and monitor cluster and application state. A controller is responsible for starting and monitoring the processes that run projects (project servers).

A single-node cluster is a cluster with a single manager node, which functions as both a manager and a controller. In development and test environments, a single-node cluster may be sufficient. You can deploy several projects to a single-node cluster; the cluster monitors project status and restarts failed projects that have failover configured. However, as you develop and refine your Event Stream Processor environment, the demands on your cluster grow. You can then expand your cluster to include additional nodes and, if necessary, add further clusters.

A cluster with multiple manager nodes is called a multinode cluster. In a multinode cluster, all manager nodes are considered primary, so the managers themselves are not a single point of failure. However, if you configure only one controller for multiple managers, that controller can become a single point of failure.

When a project is deployed to a cluster, it maintains a heartbeat with one of the managers in the cluster. If the manager node detects that heartbeats from a project have been missing for too long, it assumes the project has failed and issues a STOP command. For example, if your CPU utilization is at 100 percent, the project server may be unable to send heartbeats to the cluster manager, and the manager stops the project. If the project has failover configured, the manager then restarts it. In multinode clusters, the manager responsible for monitoring a project might not be the manager through which the project was deployed.
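The heartbeat rule described above can be illustrated with a minimal sketch. This is not Event Stream Processor code: the `ProjectRecord` type, the `check_heartbeat` function, the action strings, and the `timeout` parameter are all hypothetical names chosen for illustration, and the real product's timeout values and internal logic are not shown here.

```python
from dataclasses import dataclass


@dataclass
class ProjectRecord:
    """Hypothetical record a manager keeps for one monitored project."""
    name: str
    last_heartbeat: float   # time of the most recent heartbeat, in seconds
    failover: bool = False  # whether failover was configured for the project
    state: str = "running"


def check_heartbeat(project: ProjectRecord, now: float, timeout: float) -> str:
    """Return the action a manager would take for this project at time `now`.

    `timeout` is an assumed threshold for "missed heartbeats for too long".
    """
    if project.state != "running":
        return "none"
    if now - project.last_heartbeat <= timeout:
        return "none"
    # Heartbeats have been missing for too long: assume failure and stop.
    project.state = "stopped"
    if project.failover:
        # With failover configured, the manager restarts the project.
        project.state = "running"
        project.last_heartbeat = now
        return "stop+restart"
    return "stop"
```

In this sketch, a project without failover stays stopped after the timeout expires, while a project with failover is stopped and immediately restarted, which mirrors the behavior described in the paragraph above.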

All the manager nodes in a cluster store project information in a shared cache. If a manager node starts a project and subsequently fails, the shared cache enables any other manager in the cluster to take over management of the failed manager's projects.
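As a rough illustration of how a shared cache makes takeover possible, the sketch below models the cache as a mapping from project name to the manager currently responsible for it. The `take_over` function and the round-robin reassignment are assumptions made for this example; the source does not describe how Event Stream Processor chooses which surviving manager takes over.

```python
from typing import Dict, List


def take_over(shared_cache: Dict[str, str], failed: str,
              survivors: List[str]) -> Dict[str, str]:
    """Reassign projects owned by a failed manager to surviving managers.

    Returns a new mapping; the input cache is left unchanged.
    Round-robin assignment is an illustrative assumption.
    """
    if not survivors:
        raise ValueError("no surviving manager to take over")
    updated = dict(shared_cache)
    # Every manager can see which projects the failed manager owned,
    # because the ownership information lives in the shared cache.
    orphaned = sorted(p for p, m in shared_cache.items() if m == failed)
    for i, project in enumerate(orphaned):
        updated[project] = survivors[i % len(survivors)]
    return updated
```

For example, with projects `a` and `c` owned by a failed manager `m1` and survivors `m2` and `m3`, the sketch assigns `a` to `m2` and `c` to `m3` while leaving other projects where they were.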